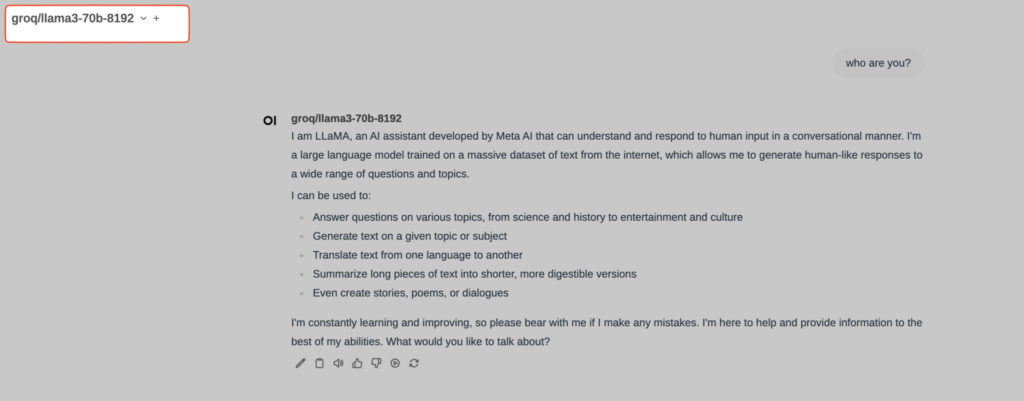

LiteLLM is a package that simplifies API calls to various LLM (Large Language Model) providers, such as Azure, Anthropic, OpenAI, Cohere, and Replicate, by exposing them all through a single, consistent format modeled on the OpenAI API. Placed behind OpenWebUI, it lets that user interface work with any provider LiteLLM supports. In my case, I use OpenWebUI+LiteLLM to query groq/llama3-70b, Anthropic Claude 3 Opus, GPT-4o, and Gemini 1.5 Pro, among others.

The problem is that, as of the 0.2.0 release, OpenWebUI no longer bundles LiteLLM. That doesn’t mean it can’t be used; rather, LiteLLM now has to be launched separately, for example in its own Docker container.

Let’s launch LiteLLM:

    $ docker run -d --name litellm \
        -v "$(pwd)/config.yaml:/app/config.yaml" \
        -p 4000:4000 \
        -e LITELLM_MASTER_KEY=sk-12345 \
        ghcr.io/berriai/litellm:main-latest \
        --config /app/config.yaml --detailed_debug

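That command launches LiteLLM listening on port 4000, with the config.yaml in the current directory mounted into the container as its configuration file, and sets sk-12345 as the master key that clients must present. The config.yaml file might look something like this (don’t forget to fill in your own API keys):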

    general_settings: {}
    litellm_settings: {}
    model_list:
      - model_name: claude-3-opus-20240229 # user-facing model alias
        litellm_params:
          api_base: https://api.anthropic.com/v1/messages
          api_key: sk-
          model: claude-3-opus-20240229
      - model_name: gpt-4-turbo
        litellm_params:
          api_base: https://api.openai.com/v1
          api_key: sk-
          model: gpt-4-turbo
      - model_name: gpt-4-vision-preview
        litellm_params:
          api_base: https://api.openai.com/v1
          api_key: sk-
          model: gpt-4-vision-preview
      - model_name: gpt-4o
        litellm_params:
          api_base: https://api.openai.com/v1
          api_key: sk-
          model: gpt-4o-2024-05-13
      - model_name: gpt-3.5-turbo
        litellm_params:
          api_base: https://api.openai.com/v1
          api_key: sk-
          model: gpt-3.5-turbo
      - model_name: gemini-1.5-pro
        litellm_params:
          api_key: AI-
          model: gemini/gemini-1.5-pro
      - model_name: groq/llama3-70b-8192
        litellm_params:
          api_key: gsk_
          model: groq/llama3-70b-8192
    router_settings: {}
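Before moving on to OpenWebUI, it can be worth checking that the proxy actually loaded the aliases from config.yaml. Here is a minimal sketch using only the Python standard library. It assumes the proxy is reachable from the host at http://localhost:4000/v1, that the master key is the sk-12345 from the docker run command, and that the model listing follows the OpenAI-style `{"data": [{"id": ...}]}` shape. The helper names are my own, not part of LiteLLM.

```python
import json
import urllib.request

# Assumptions: values taken from the `docker run` command above.
LITELLM_BASE_URL = "http://localhost:4000/v1"
LITELLM_MASTER_KEY = "sk-12345"


def build_models_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for the OpenAI-style model listing endpoint."""
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/models",
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


def extract_model_ids(payload: dict) -> list[str]:
    """Collect the model aliases from an OpenAI-style listing body."""
    return [entry["id"] for entry in payload.get("data", [])]


def fetch_model_ids(
    base_url: str = LITELLM_BASE_URL, api_key: str = LITELLM_MASTER_KEY
) -> list[str]:
    """Query the running proxy and return the aliases it exposes."""
    request = build_models_request(base_url, api_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        return extract_model_ids(json.load(response))
```

With the container running, calling `fetch_model_ids()` should return the model_name values from the config (gpt-4o, gemini-1.5-pro, and so on). If an alias is missing, the output of `docker logs litellm` is the first place I would look.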

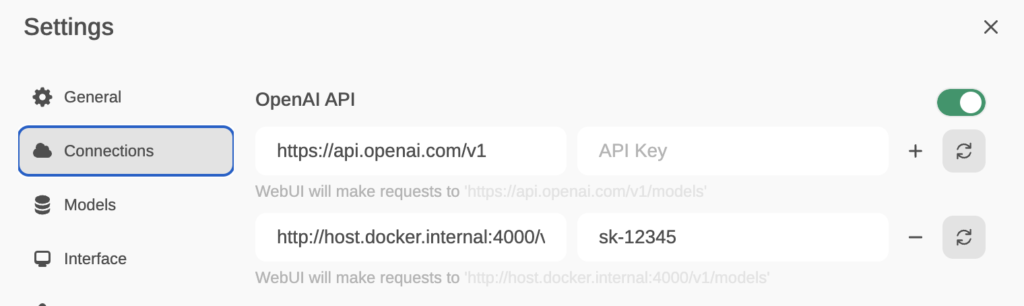

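Now, in OpenWebUI, go to Settings/Connections and enter http://host.docker.internal:4000/v1 in the host field and sk-12345 (or whichever master key you passed to LiteLLM in the docker run command) in the API-Key field. The host.docker.internal name lets the OpenWebUI container reach port 4000 on the Docker host; on Linux this typically requires starting the OpenWebUI container with --add-host=host.docker.internal:host-gateway.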
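If a model shows up in OpenWebUI but does not answer, it helps to send the same kind of request by hand. The following is a rough sketch of an OpenAI-style chat completion call against the proxy, again with only the standard library. It assumes you run it on the host (so the base URL is http://localhost:4000/v1 rather than host.docker.internal), that the key is sk-12345, and that gpt-4o is one of the aliases in your config.yaml. The function names are hypothetical helpers, not LiteLLM APIs.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, api_key: str, model: str, prompt: str
) -> urllib.request.Request:
    """Build a POST request in the OpenAI chat completions format."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def first_reply(payload: dict) -> str:
    """Return the text of the first choice in an OpenAI-style response."""
    return payload["choices"][0]["message"]["content"]


def ask(
    prompt: str,
    model: str = "gpt-4o",  # assumed alias from config.yaml
    base_url: str = "http://localhost:4000/v1",  # assumed host-side URL
    api_key: str = "sk-12345",  # assumed master key
) -> str:
    """Send one prompt through the proxy and return the model's answer."""
    request = build_chat_request(base_url, api_key, model, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        return first_reply(json.load(response))
```

Calling `ask("Say hello")` and then repeating it with `model="gemini-1.5-pro"` or `model="groq/llama3-70b-8192"` exercises each provider through the same request format, which is the point of putting LiteLLM in front of OpenWebUI.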